Two kinds of censorship resistance

How privacy blockchains and circumvention tools are "censorship resistant" in very different ways

There is a pervasive narrative that decentralized, privacy-protecting network protocols, such as privacy-focused blockchains like Monero and anonymous communication networks like Tor or Freenet, are "censorship resistant". Decentralization removes central points of control (like Web2 platforms) that can arbitrarily dictate how users use the protocol, while privacy techniques reduce visibility into exactly what these users are doing, and you "can't censor what you can't see". The end result, the story goes, is a platform that users can freely use towards diverse ends, protected from arbitrary limitations imposed by centralized authorities.

This narrative, however, only applies to a particular kind of censorship. Systems like Freenet certainly are meaningfully more censorship-resistant than centralized platforms, but that is in a usage-centric sense — it's very difficult for attackers to "remove content from Freenet" or selectively prevent certain users from using it to communicate. This sort of censorship aims to ban certain uses of the protocol — let's uncreatively name it "Type-I" censorship. Most decentralized privacy protocols aim to be Type-I censorship-resistant.

But a different kind of censorship aims to deny use of the entire network protocol. This "Type-II" censorship is systems-centric rather than usage-centric, focused on banning tools rather than particular uses of them. Type-II censors' objectives look less like "block Monero transactions to this particular user" and more like "shut down all the Monero nodes" or "block Monero across a nation-state".

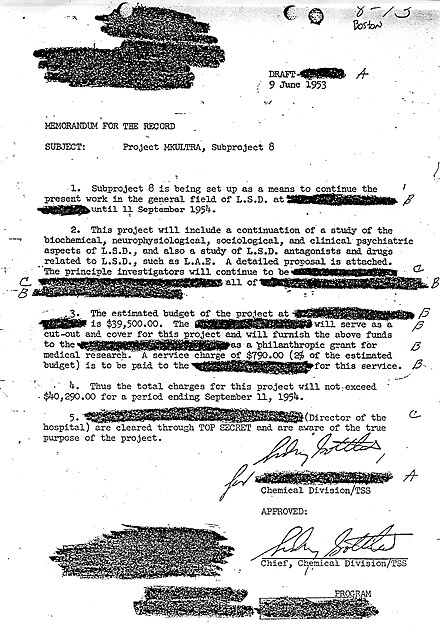

This is, of course, much scarier and harder to defend against. It's actually a little hard to formulate a threat model that's even possible to defend against — shutting down the whole Internet, for instance, is a foolproof strategy for a Type-II censor of any Internet protocol, and we need to carefully model our attacker's incentives and capabilities to rule out these high-collateral-damage strategies. Many "censorship-resistant decentralized networks" do not really deal with Type-II censorship at all, often due to a belief that a ban on all usage of a network protocol is unrealistically dystopian or simply unenforceable.

Unfortunately, Type-II censorship is a reality that billions of Internet users face every day. For instance, the Chinese censorship machine, though more famous for its often absurd attempts at Type-I censorship (e.g. banning "Xi Jinping looks like Winnie the Pooh" jokes), is in reality primarily an extensive and invasive Type-II censorship machine targeted precisely at Type-I censorship resistant systems. The "Great Firewall", besides blocking websites like Google and Twitter in a Type-I fashion, uses advanced technology, such as machine-learning based traffic classification, to shut down access to privacy/security-protecting protocols ranging from Tor and Nym to Resilio and Matrix.

And this censorship is frighteningly effective: "just use a VPN" is no answer against a censor specifically designed to shut down systems like VPNs. Even self-hosted VPN traffic is usually quickly blocked by traffic classifiers, let alone mainstream VPN services whose servers have long been blacklisted.

Type-I and Type-II censorship resistance are orthogonal to each other

Interestingly, the techniques used to resist Type-I and Type-II censorship have very little in common. While decentralization combined with cryptographic privacy is almost a "silver bullet" for Type-I censorship resistance (since it becomes simply impossible to tell which messages should be censored and which should not), Type-II censorship resistance requires much more creative solutions.

This is because while Type-I censorship resistance is solved through designing a network that cannot discriminate between different uses, Type-II censorship resistance is about a fundamentally harder problem: tricking underlying protocols and infrastructure that already discriminate between different uses to misclassify the censorship-resistant system as "innocent". In other words, while Type-I censorship resistance uses cryptography, Type-II uses steganography.

What does this look like in practice? Here are some examples of Type-II censorship-resistance techniques, often deployed in proxy tools like Geph and Tor:

Protocol obfuscation: There is a variety of techniques for encoding "sensitive" traffic belonging to a censored tool (e.g. a VPN) in ways that confuse censors into labeling it as "innocent". The two main approaches are a) tunneling traffic over an "innocent" encrypted protocol like HTTPS, as used by tools like meek and naiveproxy, and b) scrambling traffic to look uniformly random and "unclassifiable", as used in protocols like ScrambleSuit, obfs4, and sosistab2.
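To make approach b) a little more concrete, here is a minimal Python sketch of why scrambled traffic is hard to fingerprint by content. It XORs a payload with a keystream derived from SHAKE-256 and compares the byte entropy before and after. The `scramble` and `byte_entropy` helpers, the key, and the nonce are all illustrative assumptions; this is not the construction used by ScrambleSuit, obfs4, or sosistab2, and the toy cipher is not meant for real use. Real obfuscation protocols also have to disguise packet lengths and timing, which this sketch ignores.

```python
import hashlib
import math
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Shannon entropy of the byte distribution, in bits per byte (max 8.0)."""
    counts = Counter(data)
    total = len(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def scramble(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with a SHAKE-256 keystream. Toy cipher, for illustration only.

    Applying it twice with the same key and nonce restores the input.
    """
    keystream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, keystream))


# A recognizable plaintext protocol: the same HTTP request, repeated.
plain = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n" * 100
wire = scramble(b"hypothetical-shared-key", b"nonce-0001", plain)

print(f"plaintext entropy: {byte_entropy(plain):.2f} bits/byte")
print(f"scrambled entropy: {byte_entropy(wire):.2f} bits/byte")
```

The plaintext uses only a few dozen distinct byte values, so a classifier can recognize it easily, while the scrambled bytes are spread almost evenly over all 256 values and carry no fixed header or magic number to match on.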

An interesting aspect of protocol obfuscation is resisting active probing attacks. Powerful censors like the Great Firewall routinely classify traffic not only by passively analyzing it, but also by actively talking to "suspects" and observing how they respond. For instance, an easy way to check whether a particular host is an OpenVPN server is simply to connect to it using an OpenVPN client. More sophisticated attacks inject bogus data into a connection and observe how the communicating parties behave; the well-known "The Parrot is Dead" paper uses these attacks to thoroughly break a formerly common third kind of obfuscation that mimics features of innocent protocols.

Resisting active probing is quite a bit trickier than hiding from passive classifiers. For instance, we often use specially designed cryptographic handshakes (as in ScrambleSuit) where the communicating parties are assumed to share a secret "cookie" that the adversary does not know, so that a server can ignore any connection that fails to prove knowledge of the cookie.
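The shared-cookie idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not ScrambleSuit's actual handshake: the message layout, the `client_hello` and `server_reply` names, and the use of HMAC-SHA256 are assumptions made for the example. The point is only that the server stays silent unless the first bytes it receives prove knowledge of the cookie.

```python
import hashlib
import hmac
import os
from typing import Optional

NONCE_LEN = 16
TAG_LEN = 32  # HMAC-SHA256 output size


def client_hello(cookie: bytes) -> bytes:
    """First bytes a client sends: nonce || HMAC(cookie, "client" || nonce)."""
    nonce = os.urandom(NONCE_LEN)
    tag = hmac.new(cookie, b"client" + nonce, hashlib.sha256).digest()
    return nonce + tag


def server_reply(cookie: bytes, hello: bytes) -> Optional[bytes]:
    """Answer only clients that prove knowledge of the cookie.

    Returning None models staying silent, so a prober learns nothing
    from how the server responds.
    """
    if len(hello) != NONCE_LEN + TAG_LEN:
        return None
    nonce, tag = hello[:NONCE_LEN], hello[NONCE_LEN:]
    expected = hmac.new(cookie, b"client" + nonce, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None
    # Prove that the server also knows the cookie, bound to this nonce.
    return hmac.new(cookie, b"server" + nonce, hashlib.sha256).digest()


cookie = os.urandom(32)  # assumed to be shared with real users out of band
print(server_reply(cookie, client_hello(cookie)) is not None)   # real client
print(server_reply(cookie, client_hello(b"wrong guess")))       # prober
```

Both the hello and the reply are indistinguishable from random bytes to anyone without the cookie. A censor who records a valid hello could still replay it, so a real design would also need replay protection, which this sketch leaves out.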

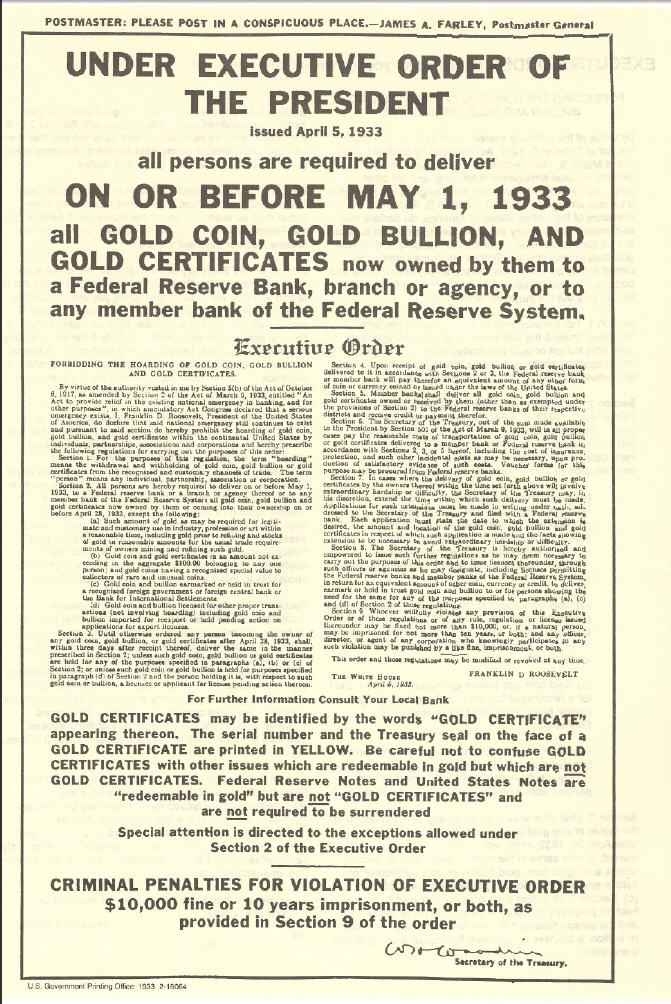

Collateral freedom: A fundamental limitation of most protocol obfuscation techniques is that they rely on information asymmetry between legitimate users and censors: if a censor somehow knows an obfuscated service's IP address — which a legitimate user needs to know — a simple blacklist will censor the service for good. This is usually unacceptable for public services, where censors can access the exact same information as real users.

A very effective technique for Type-II censorship resistance without information asymmetry is collateral freedom: tunneling traffic over an innocent third-party service rather than just an innocent protocol. These services, like CDNs, cloud storage providers, and social media platforms, are difficult to block without causing significant collateral damage. Censors face a dilemma: either allow massive censorship circumvention, or block the entire service and anger a large number of innocent users. Two examples of collateral freedom are the use of CDNs like Fastly by Tor and many other proxy tools to tunnel traffic through domain fronting, and the wide use of code hosting services like GitHub and GitLab as GFW-proof forums by the Chinese dissident community. At least until recently, GitHub was considered too valuable to the Chinese tech community to block.

One caveat, though, is that the entire concept relies on trusting a third-party service to have Type-I censorship resistance, at least with respect to the censor in question. The examples mentioned would not work if the CCP controlled GitHub, or if CDNs banned use by censorship-resistance tools (and unfortunately many do).
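Domain fronting, mentioned above, works by putting one domain name where the network can see it and another where only the CDN can. The following Python sketch models that split without touching the network. The `FrontedRequest` type, the helper functions, and both `.example` domain names are hypothetical and exist only to show which party sees which name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrontedRequest:
    sni: str             # sent in cleartext in the TLS ClientHello
    http_payload: bytes  # sent inside the encrypted TLS tunnel


def build_fronted_request(front_domain: str, hidden_host: str,
                          path: str = "/") -> FrontedRequest:
    """Put the innocent domain on the outside and the real one on the inside."""
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {hidden_host}\r\n"
        "Connection: close\r\n\r\n"
    ).encode("ascii")
    return FrontedRequest(sni=front_domain, http_payload=request)


def censor_view(req: FrontedRequest) -> str:
    """A network observer sees the SNI but not the encrypted Host header."""
    return req.sni


def cdn_route(req: FrontedRequest) -> str:
    """The CDN terminates TLS and routes on the Host header."""
    for line in req.http_payload.split(b"\r\n"):
        if line.lower().startswith(b"host:"):
            return line.split(b":", 1)[1].strip().decode("ascii")
    raise ValueError("no Host header")


req = build_fronted_request("popular-site.example", "hidden-bridge.example")
print("censor sees:", censor_view(req))
print("CDN routes to:", cdn_route(req))
```

The censor also sees the destination IP address, but that address belongs to the CDN and is shared with every other site it hosts. The scheme stops working as soon as the CDN rejects requests whose SNI and Host header disagree, which is the caveat described above.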

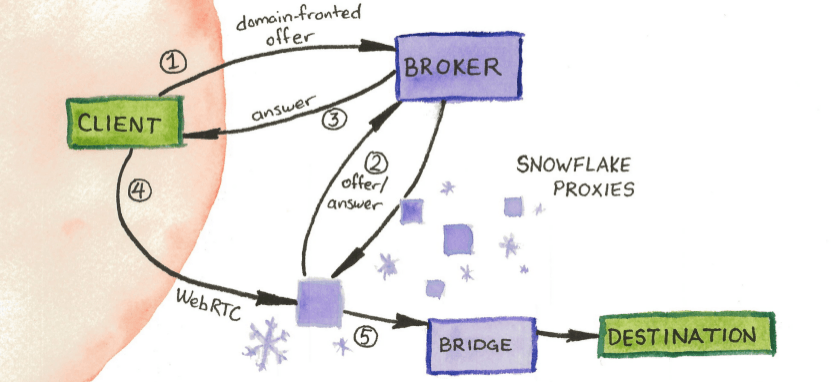

"Snowflake-like" bridge distribution: Tunneling data over third-party services is often slow and expensive. A more efficient way is to bootstrap users using collateral freedom into a main protocol that uses protocol obfuscation — an approach found in many state-of-the-art anti-censorship proxy tools, like Tor's Snowflake transport and Geph.

Here, a collateral-freedom channel is used to connect to a broker or binder server. This server then gives out information about "bridges" — entry points to the censorship-resistant network that use a strongly obfuscated protocol. Bridges are carefully distributed to users so that no one user can see all of the bridges.

The idea is that as long as the collateral-freedom broker stays accessible, and censors are unable to enumerate most of the bridges, most users would be able to access the system freely. Effectively, a trusted broker creates the information asymmetry required for protocol obfuscation.
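One simple way a broker could hand out bridges so that no single user sees them all is to derive each user's subset from a keyed hash. The sketch below uses rendezvous hashing with HMAC-SHA256. It is an assumption-laden illustration, not how Snowflake or Geph actually assign bridges; `assign_bridges`, the broker secret, and the bridge names are all hypothetical.

```python
import hashlib
import hmac


def assign_bridges(secret: bytes, user_id: str,
                   bridges: list[str], k: int) -> list[str]:
    """Deterministically pick k bridges for a user via rendezvous hashing.

    Each (user, bridge) pair gets a pseudorandom score under the broker's
    secret key, and the user receives the k lowest-scoring bridges.
    """
    def score(bridge: str) -> bytes:
        msg = user_id.encode() + b"\x00" + bridge.encode()
        return hmac.new(secret, msg, hashlib.sha256).digest()

    return sorted(bridges, key=score)[:k]


bridges = [f"bridge-{i}.example" for i in range(100)]
secret = b"hypothetical-broker-secret"

print(assign_bridges(secret, "alice", bridges, k=3))

# A censor controlling five accounts learns at most 5 * 3 = 15 bridges.
seen = set()
for i in range(5):
    seen.update(assign_bridges(secret, f"censor-{i}", bridges, k=3))
print(len(seen), "of", len(bridges), "bridges enumerated")
```

Because the assignment is deterministic, asking again under the same account reveals nothing new. A censor can only enumerate more bridges by controlling more accounts, so the scheme is only as strong as the cost of creating accounts in bulk.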

What about both?

Unfortunately, combining Type-I and II censorship resistance is even harder than Type-II alone. There are important areas where resisting Type-I and II censorship are not just orthogonal to each other, but in fact in apparent conflict:

Decentralization vs centralization: Decentralization makes it harder to coordinate censorship policies and easier for users to evade censorious nodes, and it is crucial for the Type-I censorship resistance of networks like privacy-focused blockchains and Freenet-like communication systems. Unfortunately, our best Type-II censorship resistance techniques rely on information asymmetry between "good guys" and "bad guys", as well as on trusted parties like broker servers to carefully manage this asymmetry. It's not at all clear how these huge centralized authorities, along with their potential for Type-I censorship, can be decentralized.

Privacy vs anti-Sybil systems: Privacy is an essential tool for Type-I censorship resistance, as it makes it impossible for adversaries to distinguish between different users and messages. On the other hand, effective Type-II censorship resistance requires powerful anti-Sybil techniques to protect the information asymmetry between censors and censored services. This often means privacy-weakening, nontransparent surveillance, such as bridge-distribution broker servers surveilling users, in order to ban users that are most likely responsible for leaking bridges to censors.
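To see why this kind of anti-Sybil defense costs privacy, consider a minimal Python sketch of leak attribution. The broker remembers which bridges each user was given and, when bridges get blocked, scores every user by how many of their bridges were affected. The function names, the scoring rule, and the threshold are assumptions chosen for illustration; real systems may use quite different heuristics.

```python
from collections import Counter


def suspicion_scores(assignments: dict[str, set[str]],
                     blocked: set[str]) -> Counter:
    """Count, for each user, how many of their bridges have been blocked."""
    scores: Counter = Counter()
    for user, bridges in assignments.items():
        scores[user] = len(bridges & blocked)
    return scores


def users_to_ban(assignments: dict[str, set[str]],
                 blocked: set[str], threshold: int) -> set[str]:
    """Ban users whose blocked-bridge count reaches the threshold."""
    scores = suspicion_scores(assignments, blocked)
    return {user for user, score in scores.items() if score >= threshold}


# The broker must retain this per-user record for attribution to work.
assignments = {
    "alice": {"b1", "b2"},
    "bob": {"b2", "b3"},
    "mallory": {"b4", "b5", "b6"},
}
blocked = {"b2", "b4", "b5", "b6"}

print(suspicion_scores(assignments, blocked))
print(users_to_ban(assignments, blocked, threshold=2))
```

The `assignments` table is exactly the kind of per-user record that a Type-I censorship-resistant system tries never to keep. Innocent users such as alice and bob also pick up suspicion whenever a bridge they share with a leaker is blocked.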

But many things truly need both sorts of censorship resistance. The parallel, decentralized, empowering internet that so many of us want must be internally Type-I censorship resistant while robust to Type-II censorship from without. And more interestingly, Type-II censorship can be used as a threat against systems aiming only at Type-I censorship — CDN providers with the meager Type-I censorship resistance of supporting domain fronting, for instance, quickly folded under pressure to internally ban domain fronting.

Building a robust "two-way" censorship-resistant system, especially a decentralized one, still seems to be a very open problem. A great deal of additional thinking needs to be done, probably equaling or exceeding in magnitude the advancements in zero-knowledge proofs and other privacy-protecting technology that allowed strongly Type-I censorship-resistant decentralized networks to emerge.

But I would nevertheless like to share two guesses as to how two-way censorship resistance might be possible:

Decentralized collateral freedom: can we build a decentralized network that has collateral freedom? If some Type-I censorship-resistant decentralized technology (say, a cryptocurrency) achieved mass adoption, censoring all usage of that technology would be as hard as censoring the centralized services used by current collateral-freedom systems. The challenge here, besides actually achieving mass adoption, seems to be that censors can simply mandate the use of a surveilled gateway to such a subversive network and ban using the network directly. This achieves all their censorship goals without much collateral damage. In the case of cryptocurrency, for instance, nothing seems to prevent a regime like China from banning self-custody wallets, Type-II censoring all blockchain network traffic, and still participating in the cryptocurrency economy through "compliant" custodial wallets. It seems hard to design a system where such a gateway-based censorship machine will actually cause collateral damage.

Decentralized permissioned networks: can we decentralize the sort of permissioned, Sybil-resistant bootstrapping needed for information asymmetry between users and censors? This seems to be possible in "real life" — resistance movements in e.g. occupied countries in World War II appear analogous — but it's unclear how a non-transparent yet peer-to-peer and decentralized computer network can be scaled up and automated. Bootstrapping through real-world trust seems to not scale, for instance, since societies with strong Type-II censorship (e.g. China) are usually also low-trust societies devoid of much organization outside the control of the censoring state.

In any case, I have some other thoughts on decentralizing Type-II censorship resistance, but those will have to wait for future posts...